Quick Roundup

- Latest Headlines

- Highlights

- Archive

- Recent comments

- All-Time Popular Stories

- Hot Topics

- Latest Members

- Categories

| Type | Title | Author | Replies |

Last Post |

|---|---|---|---|---|

| Page | Tux Machines IRC Logs 2021 Archive | Roy Schestowitz | 26/12/2022 - 4:29am | |

| Story | Nate Graham: KDE 2021 roadmap mid-year update | Roy Schestowitz | 1 | 27/06/2022 - 5:08pm |

| Story | digiKam 7.7.0 is released | Roy Schestowitz | 27/06/2022 - 5:02pm | |

| Story | Mozilla Firefox 102 Is Now Available for Download, Adds Geoclue Support on Linux | Marius Nestor | 1 | 27/06/2022 - 5:00pm |

| Story | Dilution and Misuse of the "Linux" Brand | Roy Schestowitz | 27/06/2022 - 3:14pm | |

| Story | Samsung, Red Hat to Work on Linux Drivers for Future Tech | Roy Schestowitz | 27/06/2022 - 3:12pm | |

| Story | How the Eyüpsultan district of Turkey uses GNU/Linux | Roy Schestowitz | 1 | 27/06/2022 - 3:02pm |

| Story | today's howtos | Roy Schestowitz | 27/06/2022 - 3:00pm | |

| Story | Red Hat Hires a Blind Software Engineer to Improve Accessibility on Linux Desktop | Roy Schestowitz | 27/06/2022 - 2:49pm | |

| Story | Today in Techrights | Roy Schestowitz | 27/06/2022 - 2:48pm |

digiKam 7.7.0 is released

Submitted by Roy Schestowitz on Monday 27th of June 2022 05:02:30 PM Filed under

After three months of active maintenance and another bug triage, the digiKam team is proud to present version 7.7.0 of its open source digital photo manager. See below the list of most important features coming with this release.

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 10947 reads

PDF version

PDF version

Dilution and Misuse of the "Linux" Brand

Submitted by Roy Schestowitz on Monday 27th of June 2022 03:14:10 PM Filed under

-

Linux Foundation Rewards StepSecurity’s Impact on CI/CD Pipeline Security Fixes for Critical Open Source Projects [Ed: Having just participated in a FUD attack together with a Microsoft proxy, not to mention issued a report with it]

-

Cardano Roundup: Lace Wallet Announcement, Hoskinson Proposes Self-Regulation, and Linux Foundation Membership [Ed: The "Linux" Foundation misuses or sells the Linux brand, diluting the name and the project's identity]

-

Can SONiC be the Linux of Networking? [Ed: The Register now abuses the Linux brand to describe something of Microsoft, which is attacking Linux]

-

Kuro: An Unofficial Microsoft To-Do Desktop Client

Microsoft says that they love Linux and open-source, but we still do not have native support for a lot of its products on Linux.

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 22775 reads

PDF version

PDF version

Samsung, Red Hat to Work on Linux Drivers for Future Tech

Submitted by Roy Schestowitz on Monday 27th of June 2022 03:12:20 PM Filed under

The metaverse is expected to uproot system design as we know it, and Samsung is one of many hardware vendors re-imagining data center infrastructure in preparation for a parallel 3D world.

Samsung is working on new memory technologies that provide faster bandwidth inside hardware for data to travel between CPUs, storage and other computing resources. The company also announced it was partnering with Red Hat to ensure these technologies have Linux compatibility.

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 22549 reads

PDF version

PDF version

today's howtos

Submitted by Roy Schestowitz on Monday 27th of June 2022 03:00:28 PM Filed under

-

How to install go1.19beta on Ubuntu 22.04 – NextGenTips

In this tutorial, we are going to explore how to install go on Ubuntu 22.04

Golang is an open-source programming language that is easy to learn and use. It is built-in concurrency and has a robust standard library. It is reliable, builds fast, and efficient software that scales fast.

Its concurrency mechanisms make it easy to write programs that get the most out of multicore and networked machines, while its novel-type systems enable flexible and modular program constructions.

Go compiles quickly to machine code and has the convenience of garbage collection and the power of run-time reflection.

In this guide, we are going to learn how to install golang 1.19beta on Ubuntu 22.04.

Go 1.19beta1 is not yet released. There is so much work in progress with all the documentation.

-

molecule test: failed to connect to bus in systemd container - openQA bites

Ansible Molecule is a project to help you test your ansible roles. I’m using molecule for automatically testing the ansible roles of geekoops.

-

How To Install MongoDB on AlmaLinux 9 - idroot

In this tutorial, we will show you how to install MongoDB on AlmaLinux 9. For those of you who didn’t know, MongoDB is a high-performance, highly scalable document-oriented NoSQL database. Unlike in SQL databases where data is stored in rows and columns inside tables, in MongoDB, data is structured in JSON-like format inside records which are referred to as documents. The open-source attribute of MongoDB as a database software makes it an ideal candidate for almost any database-related project.

This article assumes you have at least basic knowledge of Linux, know how to use the shell, and most importantly, you host your site on your own VPS. The installation is quite simple and assumes you are running in the root account, if not you may need to add ‘sudo‘ to the commands to get root privileges. I will show you the step-by-step installation of the MongoDB NoSQL database on AlmaLinux 9. You can follow the same instructions for CentOS and Rocky Linux.

-

An introduction (and how-to) to Plugin Loader for the Steam Deck. - Invidious

-

Self-host a Ghost Blog With Traefik

Ghost is a very popular open-source content management system. Started as an alternative to WordPress and it went on to become an alternative to Substack by focusing on membership and newsletter.

The creators of Ghost offer managed Pro hosting but it may not fit everyone's budget.

Alternatively, you can self-host it on your own cloud servers. On Linux handbook, we already have a guide on deploying Ghost with Docker in a reverse proxy setup.

Instead of Ngnix reverse proxy, you can also use another software called Traefik with Docker. It is a popular open-source cloud-native application proxy, API Gateway, Edge-router, and more.

I use Traefik to secure my websites using an SSL certificate obtained from Let's Encrypt. Once deployed, Traefik can automatically manage your certificates and their renewals.

In this tutorial, I'll share the necessary steps for deploying a Ghost blog with Docker and Traefik.

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 22153 reads

PDF version

PDF version

Red Hat Hires a Blind Software Engineer to Improve Accessibility on Linux Desktop

Submitted by Roy Schestowitz on Monday 27th of June 2022 02:49:40 PM Filed under

Accessibility on a Linux desktop is not one of the strongest points to highlight. However, GNOME, one of the best desktop environments, has managed to do better comparatively (I think).

In a blog post by Christian Fredrik Schaller (Director for Desktop/Graphics, Red Hat), he mentions that they are making serious efforts to improve accessibility.

Starting with Red Hat hiring Lukas Tyrychtr, who is a blind software engineer to lead the effort in improving Red Hat Enterprise Linux, and Fedora Workstation in terms of accessibility.

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 22221 reads

PDF version

PDF version

Today in Techrights

Submitted by Roy Schestowitz on Monday 27th of June 2022 02:48:28 PM Filed under

- [Meme] The World Wide Web Has Become Bloated and Slow

- Gemini Graduating to First-Class Citizen

- Links 27/06/2022: New Curl and Okular Digital Signing

- Links 27/06/2022: GNOME Design Rant

- IRC Proceedings: Sunday, June 26, 2022

- Links 26/06/2022: Linux 5.19 RC4

- Links 26/06/2022: New Stable Kernels and Freedom of the Press on Trial

- Links 26/06/2022: Windows vs GNU/Linux and Mixtile Edge 2 Kit

- Links 26/06/2022: Shotcut 22.06 and More Netflix Layoffs

- IRC Proceedings: Saturday, June 25, 2022

- This Past Week the Linux Foundation Ran a Massive Misinformation Campaign in Texas and It Paid Media/Publishers to Repeat Lies/Spin

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 21913 reads

PDF version

PDF version

Android Leftovers

Submitted by Rianne Schestowitz on Monday 27th of June 2022 01:32:40 PM Filed under

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 22080 reads

PDF version

PDF version

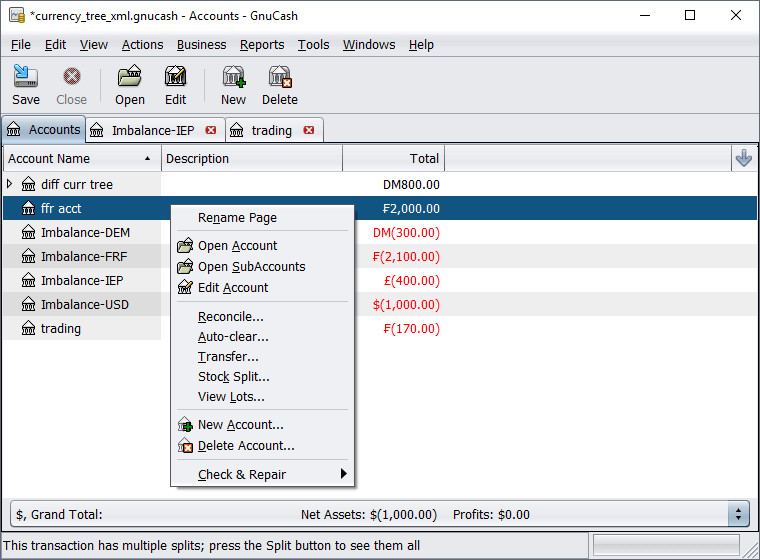

GnuCash 4.11

Submitted by Rianne Schestowitz on Monday 27th of June 2022 01:25:30 PM Filed under

GnuCash is a personal and small business finance application, freely licensed under the GNU GPL and available for GNU/Linux, BSD, Solaris, Mac OS X and Microsoft Windows. It’s designed to be easy to use, yet powerful and flexible. GnuCash allows you to track your income and expenses, reconcile bank accounts, monitor stock portfolios and manage your small business finances. It is based on professional accounting principles to ensure balanced books and accurate reports.

GnuCash can keep track of your personal finances in as much detail as you prefer. If you are just starting out, use GnuCash to keep track of your checkbook. You may then decide to track cash as well as credit card purchases to better determine where your money is being spent. When you start investing, you can use GnuCash to help monitor your portfolio. Buying a vehicle or a home? GnuCash will help you plan the investment and track loan payments. If your financial records span the globe, GnuCash provides all the multiple-currency support you need.

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 22073 reads

PDF version

PDF version

today's leftovers

Submitted by Roy Schestowitz on Monday 27th of June 2022 12:28:45 PM Filed under

-

curl 7.84.0 inside every box

Welcome to take the next step with us in this never-ending stroll.

-

Mars Probe Running OS Developed in Windows 98 Receives Software Update in Space [Ed: With a budget like this, there's no excuse for running vandalOS from Microsoft]

-

ESP32 board with 150Mbps 4G LTE modem also supports RS485, CAN Bus, and relay expansion - CNX Software

LILYGO has designed another ESP32 board with a 4G LTE modem with the LILYGO T-A7608E-H & T-A7608SA-H variants equipped with respectively SIMCom A7608SA-H for South America, New Zealand, and Australia, and SIMCom A7608E-H for the EMEA, South Korean, and Thai markets, both delivering up to 150 Mbps download and 50 Mbps upload speeds.

The board also supports GPS, includes a 18650 battery holder, and features I/O expansion headers that support an add-on board with RS485 and CAN bus interfaces, in a way similar to the company’s earlier TTGO T-CAN485 board with ESP32, but no cellular connectivity.

-

ICE-V Wireless FPGA board combines Lattice Semi iCE40 UltraPlus with WiFi & BLE module - CNX Software

Lattice Semi ICE40 boards are pretty popular notably thanks to the availability of open-source tools. ICE-V Wireless is another ICE40 UltraPlus FPGA board that also adds wireless support through an ESP32-C3-MINI-1 module with WiFi 4 and Bluetooth LE connectivity.

Designed by QWERTY Embedded Design, the board also comes with 8MB PSRAM, offers three PMOD expansion connectors, plus a header for GPIOs, and supports power from USB or a LiPo battery (charging circuit included).

-

Felipe Borges: See you in GUADEC!

After two virtual conferences, GUADEC is finally getting back to its physical form. And there couldn’t be a better place for us to meet again than Mexico! If you haven’t registered yet, hurry up!

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 21911 reads

PDF version

PDF version

Red Hat Servers, and AlmaLinux

Submitted by Roy Schestowitz on Monday 27th of June 2022 12:27:10 PM Filed under

-

What is distributed consensus for site reliability engineering? | Opensource.com

With microservices, containers, and cloud native architectures, almost every application today is going to be a distributed application. Distributed consensus is a core technology that powers distributed systems.

Distributed consensus is a protocol for building reliable distributed systems. You cannot rely on "heartbeats" (signals from your hardware or software to indicate that they're operating normally) because network failures are inevitable.

There are some inherent problems to highlight when it comes to distributed systems. Hardware will fail. Nodes in a distributed system can randomly fail.

This is one of the important assumptions you have to make before you design a distributed system. Network outages are inevitable. You cannot always guarantee 100% network connectivity. Finally, you need a consistent view of any node within a distributed system.

-

How Cloud AI Developer Services empower developers

It’s been almost 11 years since Marc Andreessen famously posted, “Software is eating the world.” Over the last decade in IT, we’ve seen some amazing transformations happen – from companies fundamentally changing the way they deliver software to how we as consumers use web and mobile applications and services in our daily lives. Research shows that the average person in the U.S. now uses at least four to five software programs a day to do their job – partially due to the pandemic.

Developing software has emerged as perhaps the most critical business function for companies as they undergo digital transformation to adapt to fast-paced change, delight their customers, and stand out from competitors. As such, the role of software developers has evolved at such a rapid pace that it is now more common to deliver software u

-

What is next for Open Source- Alma Linux and beyond | Business Insider India

When Red Hat announced that it will no longer support open-source CentOS, a wave of disturbance was caused throughout the open-source community. In response, the open-source community formed an alliance and started building alternatives for CentOS.

While they initially named it Lenix, which was a paid offering and was initially restricted to hosting providers, its eventual success led the community to make it 100% free and open-source and renamed it to AlmaLinux.

Today, AlmaLinux has delivered over three releases with millions of downloads. It has become an alternative platform for developers for CentOS Stream and Red Hat Enterprise Linux. Even though its user-facing features are limited, its speed and agility have made it an attractive avenue for the open-source community.

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 22414 reads

PDF version

PDF version

Digitally signing PDF documents in Linux: with hardware token & Okular

Submitted by Roy Schestowitz on Monday 27th of June 2022 12:13:57 PM Filed under

We are living in 2022. And it is now possible to digitally sign a PDF document using libre software. This is a love letter to libre software projects, and also a manual.

For a long time, one of the challenges in using libre software in ‘enterprise’ environments or working with Government documents is that one will eventually be forced to use a proprietary software that isn’t even available for a libre platform like GNU/Linux. A notorious use-case is digitally signing PDF documents.

Recently, Poppler (the free software library for rendering PDF; used by Evince and Okular) and Okular in particular has gained a lot of improvements in displaying digital signature and actually signing a PDF document digitally (see this, this, this, this, this and this). When the main developer Albert asked for feedback on what important functionality would the community like to see incorporated as part this effort; I had asked if it would be possible to use hardware tokens for digital signature. Turns out, poppler uses nss (Network Security Services, a Mozilla project) for managing the certificates, and if the token is enrolled in NSS database, Okular should be able to just use it.

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 22203 reads

PDF version

PDF version

today's howtos

Submitted by Roy Schestowitz on Monday 27th of June 2022 11:14:39 AM Filed under

-

How to Install Fail2ban on Ubuntu 22.04

Fail2ban is a free and open-source IPS that helps administrators safeguard Linux servers against brute-force assaults. Python-based Fail2ban has filters for Apache2, SSH, FTP, etc. Fail2ban blocks the IP addresses of fraudulent login attempts.

Fail2ban scans service log files (e.g. /var/log/auth.log) and bans IP addresses that reveal fraudulent login attempts, such as too many wrong passwords, seeking vulnerabilities, etc. Fail2ban supports iptables, ufw, and firewalld. Set up email alerts for blocked login attempts.

In this guide, we’ll install and configure Fail2ban to secure Ubuntu 22.04. This article provides fail2ban-client commands for administering Fail2ban service and prisons.

-

How to install software packages on Red Hat Enterprise Linux (RHEL) | Enable Sysadmin

There's a lot of flexibility in how you install an application on Linux. It's partly up to the software's developer to decide how to deliver it to you. In many cases, there's more than one "right" way to install something.

-

What is the /etc/hosts file in Linux – TecAdmin

/etc/hosts is a text file on a computer that maps hostnames to IP addresses. It is used for static name resolution, which is not updated automatically like the Domain Name System (DNS) records.

/etc/hosts are usually the first file checked when resolving a domain name, so it can be used to block websites or redirect users to different websites.

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 22494 reads

PDF version

PDF version

Accessibility in Fedora Workstation

Submitted by Roy Schestowitz on Monday 27th of June 2022 10:24:55 AM Filed under

The first concerted effort to support accessibility under Linux was undertaken by Sun Microsystems when they decided to use GNOME for Solaris. Sun put together a team focused on building the pieces to make GNOME 2 fully accessible and worked with hardware makers to make sure things like Braille devices worked well. I even heard claims that GNOME and Linux had the best accessibility of any operating system for a while due to this effort. As Sun started struggling and got acquired by Oracle this accessibility effort eventually trailed off with the community trying to pick up the slack afterwards. Especially engineers from Igalia were quite active for a while trying to keep the accessibility support working well.

But over the years we definitely lost a bit of focus on this and we know that various parts of GNOME 3 for instance aren’t great in terms of accessibility. So at Red Hat we have had a lot of focus over the last few years trying to ensure we are mindful about diversity and inclusion when hiring, trying to ensure that we don’t accidentally pre-select against underrepresented groups based on for instance gender or ethnicity. But one area we realized we hadn’t given so much focus recently was around technologies that allowed people with various disabilities to make use of our software. Thus I am very happy to announce that Red Hat has just hired Lukas Tyrychtr, who is a blind software engineer, to lead our effort in making sure Red Hat Enterprise Linux and Fedora Workstation has excellent accessibility support!

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 22257 reads

PDF version

PDF version

Android Leftovers

Submitted by Rianne Schestowitz on Monday 27th of June 2022 10:05:33 AM Filed under

-

YouTube Music album interface gets a new makeover on Android tablets | Android Central

-

How to download Instagram reels in gallery on Android?

-

How to see your WiFi password on Android

-

Twitter videos now have closed captions on Android – Phandroid

-

Nothing looks like this new Android phone! You can own it before anyone else | Express.co.uk

-

T-Mobile brings stable Android 12 goodies to its OnePlus Nord N200 5G - PhoneArena

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 21964 reads

PDF version

PDF version

Raspberry Pi Zero Prints Giant Pictures with Thermal Receipt Printer

Submitted by Roy Schestowitz on Monday 27th of June 2022 09:59:07 AM Filed under

It’s no secret that thermal receipt printers can print much more than receipts, but this Raspberry Pi project, created by a maker known as -PJFry- on Reddit, has taken the idea to a new extreme. With the help of a Raspberry Pi Zero, they’ve coded an application to print huge, poster-sized images (opens in new tab) one strip at a time on their thermal printer.

Inspiration for this project came from similar online projects where users print large-scale images using regular printers or thermal printers like the one used in this project. In this case, however, -PJFry- coded the project application from scratch to work on the Pi Zero. It works by taking an image and breaking it into pieces that fit across the width of the receipt printer and printing it one strip at a time. Then, these strips can be lined up to create a full-sized image.

It is the only microelectronics project we can find that -PJFry- has shared, but it’s clear they have a great understanding of our favorite SBC to craft something this creative from scratch. According to -PJFry-, the project wasn’t created for efficiency but more for fun as a proof of concept. The result is exciting and provides an artistic take on the Raspberry Pi’s potential.

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 22158 reads

PDF version

PDF version

Excellent Utilities: Extension Manager - Browse, Install and Manage GNOME Shell Extensions

Submitted by Rianne Schestowitz on Monday 27th of June 2022 09:58:53 AM Filed under

This series highlights best-of-breed utilities. We cover a wide range of utilities including tools that boost your productivity, help you manage your workflow, and lots more besides.

Part 22 of our Linux for Starters series explains how to install GNOME shell extensions using Firefox. Because of a bug, our guide explains that it’s not possible to install the extensions using the Snap version of Firefox. Instead, you need to install the deb package for Firefox (or use a different web browser).

However, if you have updated to Ubuntu 22.04, you’ll find that trying to install Firefox using apt won’t install a .deb version. Instead, it fetches a package that installs the Firefox Snap. You can install a Firefox deb from the Mozilla Team PPA. But there has to be an easier way to install and manage GNOME Shell Extensions.

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 22239 reads

PDF version

PDF version

Mozilla Firefox 102 Is Now Available for Download, Adds Geoclue Support on Linux

Submitted by Marius Nestor on Monday 27th of June 2022 09:47:14 AM Filed under

Firefox 102 is now here to introduce support for Geoclue on Linux, a D-Bus service that provides geolocation services when needed by certain websites.

It also improves the Picture-in-Picture feature by adding support for subtitles and captions for the Dailymotion, Disney+ Hotstar, Funimation, HBO Max, SonyLIV, and Tubi video streaming services, and further improves the PDF reading mode when using the High Contrast mode.

- 1 comment

Printer-friendly version

Printer-friendly version- Read more

- 22647 reads

PDF version

PDF version

Why I think the GNOME designers are incompetent

Submitted by Roy Schestowitz on Monday 27th of June 2022 08:41:01 AM Filed under

But GNOME folk didn't know how to do this. They don't know how to do window management properly at all. So they take away the title bar buttons, then they say nobody needs title bars, so they took away title bars and replaced them with pathetic "CSD" which means that action buttons are now above the text to which they are responses. Good move, lads. By the way, every written language ever goes from top to bottom, not the reverse. Some to L to R, some go R to L, some do both (boustrophedon) but they all go top to bottom.

The guys at Xerox PARC and Apple who invented the GUI knew this. The clowns at Red Hat don't.

There are a thousand little examples of this. They are trying to rework the desktop GUI without understanding how it works, and for those of us who do know how it works, and also know of alternative designs these fools have never seen, such as RISC OS, which are far more efficient and linear and effective, it's extremely annoying.

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 22075 reads

PDF version

PDF version

Devices With GNU/Linux and Hardware Hacking

Submitted by Roy Schestowitz on Monday 27th of June 2022 08:39:59 AM Filed under

-

Introducing ESP32-C5: Espressif’s first Dual-Band Wi-Fi 6 MCU

ESP32-C5 packs a dual-band Wi-Fi 6 (802.11ax) radio, along with the 802.11b/g/n standard for backward compatibility. The Wi-Fi 6 support is optimised for IoT devices, as the SoC supports a 20MHz bandwidth for the 802.11ax mode, and a 20/40MHz bandwidth for the 802.11b/g/n mode.

-

Compulab’s new IoT gateway is based on NXP’s i.MX.8M processor and runs on Linux, MS Azure IoT and Node RED [Ed: Compulab should shun spyware from Microsoft. Bad neighbourhood.]

The IOT-GATE-IMX8PLUS is an IoT gateway made by Compulab that is based on the NXP i.MX.8M Plus System on Chip (SoC) for commercial or industrial applications. The device features dual GbE ports, Wi-Fi6/BLE 5.3 support, LTE 4G, GPS and many optional peripherals.

Compulab’s new IoT gateway provides support for two processor models, the C1800Q and the C1800QM. Both come with a real time processor but only the C1800QM includes the AI/ML Neural Processing Unit.

-

Want A Break From Hardware Hacking? Try Bitburner

If you ever mention to a normal person that you’re a hacker, and they might ask you if you can do something nefarious. The media has unfortunately changed the meaning of the word so that most people think hackers are lawless computer geniuses instead of us simple folk who are probably only breaking the laws meant to prevent you from repairing your own electronics. However, if you want a break, you can fully embrace the Hollywood hacker stereotype with Bitburner. Since it is all online, you don’t even have to dig out your hoodie.

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 21629 reads

PDF version

PDF version

today's howtos

Submitted by Roy Schestowitz on Monday 27th of June 2022 08:38:47 AM Filed under

-

Split Audio Files into Parts

I recently got in the need of splitting quite large amount of audio files into smaller equal parts. The first thought that came to my mind was – probably thousand or more people had similar problem in the past so its already solved – so I went directly to the web search engine.

The found solutions seem not that great or work partially only … or not work like I expected them to work. After looking at one of the possible solutions in a bash(1) script I started to modify it … but it turned out that writing my own solution was faster and easier … and simpler.

Today I will share with you my solution to automatically split audio files into small equal parts.

-

A Previous Sibling Selector

In natural language, what I wanted was: “select every

element that directly precedes an <hr> element and style the <a> link inside of it.”

I know how to select the next sibiling of an element with div + p.

And I know how to select any adjacent sibling of an element (which follows it) with div ~ p.

And I learned how to select an element when it only has one child with p:only-child a (even though they are the only element on their line, markdown will wrap the [link](#) elements in a paragraph tag).

But how do I select the previous sibling of an element? Something like p:before(hr) which would select all paragraphs that precede an <hr> element.

-

How to Set Static IP Address on Ubuntu 22.04

Hello folks, in this guide, we will cover how to set static ip address on Ubuntu 22.04 (Jammy Jellyfish) step by step.

-

Make a temporary file on Linux with Bash | Opensource.com

When programming in the Bash scripting language, you sometimes need to create a temporary file. For instance, you might need to have an intermediary file you can commit to disk so you can process it with another command. It's easy to create a file such as temp or anything ending in .tmp. However, those names are just as likely to be generated by some other process, so you could accidentally overwrite an existing temporary file. And besides that, you shouldn't have to expend mental effort coming up with names that seem unique. The mktemp command on Fedora-based systems and tempfile on Debian-based systems are specially designed to alleviate that burden by making it easy to create, use, and remove unique files.

-

Use a SystemTap example script to trace kernel code operation | Red Hat Developer

SystemTap allows developers to add instrumentation to Linux systems to better understand the behavior of the kernel as well as userspace applications and libraries. This article, the first in a two-part series, shows how SystemTap can reveal potential performance problems down to individual lines of code. The second part of the series will describe how a SystemTap performance monitoring script was written.

-

How to Add Your Own Custom Color in LibreOffice - Make Tech Easier

While writing or editing text in LibreOffice, there are times where you need to change the color of the text or the background. LibreOffice comes with its own set of color palette that you choose the color from and it is easily accessible from the toolbar. The problem is that if you want to use a custom color which is not available in the palette, you are out of luck because there are no visible options for you to add your own color to the palette.

-

How to Split Vim Workspace Vertically or Horizontally

There is no better computing environment than the one availed by a Linux operating system distribution. This operating system environment gives its users a complete computing experience without too much interaction with the GUI (Graphical User Interface). The more experienced you are with Linux the more time you spend on the Linux command-line environment.

The command-line environment is efficient enough to handle OS-centered tasks like file editing, configuration, and scripting. A command-line text editor like the Vim editor makes it possible to perform such tasks.

-

How to Create AWS VPC Peering in same account/region using Terraform

Amazon VPC peering enables the network connection between the private VPCs to route the traffic from one VPC to another. You can create VPC Peering between your own VPC with the VPC in the same region or a different region or with other VPCs in a different AWS account in a different region.

AWS create peering connection by using the existing infrastructure of the VPC. VPC peering connection is not a form of gateway or VPN connection. It helps to make easy to transfer the data from VPC to VPC.

In this guice, it is assumed that the VPCs that you want to peer have been created. If you need help creating a VPC checkout this guide. We need to create the peering request from the peering owner VPC, accept the peering connection request in the accepter account and update the route tables in both the VPCs with entries for the peering connection from either side.

-

How to Install Darktable on Ubuntu 22.04 LTS - LinuxCapable

Darktable is a free and open-source photography application program and raw developer. Rather than being a raster graphics editor like Adobe Photoshop or GIMP, it comprises a subset of image editing operations specifically aimed at non-destructive raw image post-production. In addition to basic RAW conversion, Darktable is equipped with various tools for basic and advanced image editing. These include exposure correction, color management, white balance, image sharpening, noise reduction, perspective correction, and local retouching. As a result, Darktable is an incredibly powerful tool for photographers of all experience levels. Best of all, it is entirely free to download and use.

-

How to Create a Sudo User on Fedora Linux

Fedora Linux is not a new name in the world of computing. This Linux operating system distribution has Red Hat as its primary sponsor. Red Hat made its development possible via the Fedora Project. Through free and open-source licenses, Fedora hosts a variety of distributed software.

This Linux distribution also functions as an upstream for the Red hat Enterprise Linux Community version. The latter statement implies that the Fedora Project is a direct fork for Red Hat. In other words, Red Hat directly borrows its features’ implementation from Fedora.

-

ulimit command usage in Linux - TREND OCEANS

ulimit command is used by the administrator to limit hardware resources in a pool share and is mainly used by shared hosting providers to curb unwanted hardware resource usage by other tenants.

There are two types of limits that you can set on your Linux machine.

-

How to Install Pinta on Ubuntu 22.04 LTS - LinuxCapable

Pinta is an excellent image editing tool for both novice and experienced users. The user interface is straightforward yet still packed with features. The drawing tools are comprehensive and easy to use, and the wide range of effects makes it easy to add a professional touch to your images. One of the best features of Pinta is the ability to create unlimited layers. This makes it easy to keep your work organized and tidy, which is essential for anyone who wants to develop complex or detailed images. Pinta is a great all-around image editor in every artist’s toolkit.

-

History of Version Control Systems: Part 1

First-generation version control systems made collaboration possible, but it was painful. Deleting, renaming, or creating new files wasn't easily done. Tracking files across multiple directories as part of one project was impossible. Branching and merging were confusing. Locks worked by copying a file with read-only or read-write UNIX permissions. Inevitably, programmers didn't want to wait for someone else to finish editing, so they got around the lock system with a simple chmod.

The two widely used first-generation version control systems were SCCS and RCS.

-

How Learning To Code Can Change Your Life?

The art of “Communicating with Computers” is called coding, It allows us to be able to communicate with computers, and make them do what we want them to. One of the most exciting aspects of learning to code is the potential to bring your ideas to life and that’s how popular games, software, apps, web apps, and various other algorithms are built.

- Login or register to post comments

Printer-friendly version

Printer-friendly version- Read more

- 22078 reads

PDF version

PDF version

More in Tux Machines

- Highlights

- Front Page

- Latest Headlines

- Archive

- Recent comments

- All-Time Popular Stories

- Hot Topics

- New Members

today's howtos

| Red Hat Hires a Blind Software Engineer to Improve Accessibility on Linux Desktop

Accessibility on a Linux desktop is not one of the strongest points to highlight. However, GNOME, one of the best desktop environments, has managed to do better comparatively (I think).

In a blog post by Christian Fredrik Schaller (Director for Desktop/Graphics, Red Hat), he mentions that they are making serious efforts to improve accessibility.

Starting with Red Hat hiring Lukas Tyrychtr, who is a blind software engineer to lead the effort in improving Red Hat Enterprise Linux, and Fedora Workstation in terms of accessibility.

|

Today in Techrights

| Android Leftovers |

Older Stories (Next Page)

- Canonical and IBM Leftovers

- today's howtos

- Programming Leftovers

- 9to5Linux Weekly Roundup: June 26th, 2022

- today's howtos

- LINMOB.net - Weekly #MobilePOSIX Update (25/2022): Better Processing in Megapixels and another report on the PinePhone Pro Cameras

- Audiocasts/Shows: Open Source Security Podcast, GNU World Order, Brodie Robertson, and More

- Review: AlmaLinux OS 9.0

- Linux 5.19-rc4

- GNOME Devs Bring New List View to Nautilus File Manager

- New Version of ArcMenu GNOME Extension Released

- FSFE Information stand at Veganmania MQ

- Android Leftovers

- Stable Kernels: 5.18.7, 5.15.50, 5.10.125, 5.4.201, 4.19.249, 4.14.285, and 4.9.320

- today's howtos

- Linux Mint: The Beginner-Friendly Linux Operating System for Everyone

- today's howtos

- Red Hat / IBM Leftovers

- Programming Leftovers

- Security Leftovers

.svg_.png)

Content (where original) is available under CC-BY-SA, copyrighted by original author/s.

Content (where original) is available under CC-BY-SA, copyrighted by original author/s.

Recent comments

35 weeks 3 days ago

35 weeks 3 days ago

35 weeks 3 days ago

35 weeks 4 days ago

35 weeks 4 days ago

35 weeks 4 days ago

35 weeks 4 days ago

35 weeks 4 days ago

35 weeks 4 days ago

35 weeks 4 days ago